So, your phone runs games that look better than a ten-year-old PC, your laptop crunches a million Excel cells before you finish your coffee, and every smart device in your home begs for attention. What makes all this possible? The answer is deep inside the chips, where the war between core and processing unit decides just how powerful, efficient, and snappy your gadget feels. But here’s the thing—most folks (and plenty of tech commercials) mix up these terms. If you really want to understand why a 12-core gaming rig can still lag behind a clever smartphone, or why two CPUs with the same clock speed can behave worlds apart, you need to separate myth from fact. Stick with me and you’ll see just how much magic is under the hood, and what it really means for anything built with a chip.

Basics: What Is a Core and What Is a Processing Unit?

First things first. Let’s slice through the confusion: a core and a processing unit are not the same thing. In the chip world, the word "processing unit" really means something broad. It could be a CPU (Central Processing Unit), a GPU (Graphics Processing Unit), or even something like an NPU (Neural Processing Unit) inside your latest AI-powered phone camera. Each "processing unit" is a collection of circuits specifically designed to run certain tasks in a computer system. A CPU, for example, juggles general-purpose computing (from handling your tabs in Chrome to keeping your system clock ticking), while a GPU handles highly parallel chores (like rendering thousands of game pixels at once).

Now, a core is like a tiny brain inside a processing unit. Back in the day, a CPU chip usually had a single core that did all your computer’s thinking, a lone wolf. In the mid-2000s, chipmakers began putting multiple cores on the same chip, letting them work side by side. That’s why you see "quad-core," "octa-core," or even "16-core" plastered all over boxes and ads. Each core can process tasks independently, as if your office had suddenly cloned its best employees. But here’s the catch: the core isn’t the whole show. It’s just one part of a broader processing unit, like a chef in a much bigger kitchen. The kitchen itself (the CPU or GPU) has other helpers: memory controllers, caches, and the interconnect circuits linking everything together.

This is where it gets interesting. A CPU (Central Processing Unit) could have four, eight, or thirty-two cores. Each core can handle its own chunk of work. But you might also have multiple CPUs in high-end servers—where each CPU has many cores. The term "processing unit" could even mean the whole physical chip, including all its cores and support circuits. And just to add another layer, a GPU might have thousands of tiny processing elements, each one a kind of "core" tailored for graphics, but very different from a CPU core. In short, a core is a worker; the processing unit is the factory or department that includes the workers and the machinery they use to get things done.

Want proof of how blurry things get? Your typical smartphone runs on a chip called an "SoC" (System on a Chip), which bundles CPU cores, GPU cores, NPUs, and more, all sharing the same piece of silicon. Look at the spec sheet for a recent iPhone and it’ll brag about something like "6-core CPU, 4-core GPU." Apple is talking about cores inside separate processing units that cooperate on one chip. It’s this architecture that lets your pocket-size gadget out-muscle many laptops from a decade ago.
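To make the chip, processing unit, and core layers concrete, here is a minimal Python sketch of how a spec line like "6-core CPU, 4-core GPU" could be derived from the parts of an SoC. The class names, the chip name, and the split into performance and efficiency clusters are all illustrative assumptions, not a description of any real chip.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ProcessingUnit:
    kind: str            # e.g. "CPU", "GPU", "NPU"
    cores: int           # number of cores in this unit or cluster
    role: str = ""       # optional label such as "performance" or "efficiency"


@dataclass
class SoC:
    name: str
    units: list[ProcessingUnit] = field(default_factory=list)

    def cores_by_kind(self) -> dict[str, int]:
        """Add up cores per kind of processing unit, in first-seen order."""
        totals: dict[str, int] = {}
        for unit in self.units:
            totals[unit.kind] = totals.get(unit.kind, 0) + unit.cores
        return totals

    def spec_line(self) -> str:
        """Build the kind of summary a marketing spec sheet would print."""
        return ", ".join(
            f"{count}-core {kind}" for kind, count in self.cores_by_kind().items()
        )


# Hypothetical phone chip: two CPU clusters plus one GPU on the same silicon.
phone = SoC(
    "example-phone-chip",
    [
        ProcessingUnit("CPU", 2, "performance"),
        ProcessingUnit("CPU", 4, "efficiency"),
        ProcessingUnit("GPU", 4),
    ],
)
print(phone.spec_line())  # 6-core CPU, 4-core GPU
```

Notice that the headline "6-core CPU" hides the fact that the six cores are not all the same kind, which is exactly the sort of detail a spec sheet tends to flatten.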

And don’t forget: more cores doesn’t always mean more speed. Not all tasks can be split between cores (think of writing an email versus breaking a movie file into parts to edit). Also, some older programs were written to run on a single core, so even a 32-core monster chip won’t make them faster. That’s why understanding cores inside processing units isn’t just nerd trivia; it’s the trick to picking technology that won’t let you down. We’ll get deeper into the real-world impact in a second. But first, let’s lay out the big differences where it matters.
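The standard way to put numbers on this is Amdahl’s law: if only a fraction of a job can be split across cores, the serial remainder caps the speedup. The sketch below is a rough illustration; the function name and the workload fractions are made up for the example, not measured from real software.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of a task can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: the fractions are illustrative guesses.
workloads = {
    "mostly serial app (10% parallel)": 0.10,
    "video export (95% parallel)": 0.95,
}
for name, fraction in workloads.items():
    for cores in (1, 4, 32):
        print(f"{name}, {cores} cores: {amdahl_speedup(fraction, cores):.2f}x")
```

Under these assumed fractions, the mostly serial app barely moves past 1.1x even on 32 cores, while the video export lands around 12.5x rather than 32x, because the 5% that cannot be split still has to run on one core.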

How Cores and Processing Units Impact Performance

So what does all this mean for you when you power on a laptop or launch a graphics-heavy game? To start, having more CPU cores in your system means you can juggle more tasks without sweating. Open 50 browser tabs while your antivirus runs in the background? A multi-core processor barely notices the strain. But toss in multiple processing units—like pairing a dedicated graphics card (GPU) with your CPU—and suddenly you unlock next-level performance, since each processing unit tackles its specialty.

Take gaming. Modern 3D games lean heavily on GPUs, which are built with hundreds or thousands of tiny computing units engineered for rendering complex visuals in parallel. The CPU (with its own set of powerful cores) orchestrates everything: physics calculations, artificial intelligence, and player input. If you upgrade only your CPU but keep an underpowered GPU, your frame rates might crawl. Flip the scenario, adding GPU muscle to a slow CPU with too few cores, and the GPU sits idle waiting for the CPU to feed it work, so those gorgeous high-res frames arrive late. It’s the tag-team of powerful CPU cores paired with a modern GPU that delivers a buttery experience.

The core-unit distinction also defines battery life in mobile devices. Check out this true-to-life example: ARM’s "big.LITTLE" design, now used by companies like Samsung, splits CPU cores into high-power and low-power types on the same chip. When you’re scrolling through texts or emails, the system sends work to lower-power cores, sipping battery gently. Fire up a live video call or a 3D game? The chip switches to beefier, high-performance cores, keeping things smooth. Multiple cores *inside* one processing unit keep your device both fast and efficient—and most folks never notice the behind-the-scenes choreography.
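As a toy illustration of that choreography, the sketch below routes tasks to "little" or "big" cores based on a made-up demand score. Real schedulers weigh far more signals (temperature, battery state, recent load history), so treat the `Task` class, the `pick_cluster` function, and the 0.5 threshold as assumptions for the example only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    demand: float  # hypothetical load score: 0.0 (idle) to 1.0 (very heavy)


def pick_cluster(task: Task, threshold: float = 0.5) -> str:
    """Return "big" for heavy tasks and "little" for light ones.

    The threshold is an illustrative assumption, not a value any real chip uses.
    """
    if not 0.0 <= task.demand <= 1.0:
        raise ValueError("demand must be between 0.0 and 1.0")
    return "big" if task.demand >= threshold else "little"


# Made-up tasks with made-up demand scores.
tasks = [Task("read email", 0.1), Task("video call", 0.7), Task("3D game", 0.95)]
for task in tasks:
    print(f"{task.name} -> {pick_cluster(task)} cores")
```

The point of the sketch is the shape of the decision: light work stays on the cores that sip power, and only demanding work wakes the hungry ones.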

But here’s a twist: efficiency isn’t just about the number of cores, but also how well each one communicates within the processing unit. That’s where the role of onboard memory cache comes in—think of it as a lightning-fast sticky note for temporary data. A good cache system prevents bottlenecks and lets each core chew through instructions without waiting for slow RAM. That’s why high-end server CPUs sometimes cost thousands—they have massive cache banks, intricate communication pathways, and more cores packed in than even the most advanced gaming PC.
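A common back-of-the-envelope way to see why cache matters is the average memory access time formula: every access pays the cache hit time, and the fraction that misses also pays the trip to slower memory. The latencies below are round, hypothetical numbers chosen to make the arithmetic easy, not figures for any particular chip.

```python
def average_access_time_ns(
    hit_time_ns: float, miss_rate: float, miss_penalty_ns: float
) -> float:
    """Average memory access time = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time_ns + miss_rate * miss_penalty_ns


# Hypothetical latencies: 1 ns for a cache hit, 100 ns extra to reach RAM.
CACHE_HIT_NS = 1.0
RAM_PENALTY_NS = 100.0

for miss_rate in (0.02, 0.10):
    average = average_access_time_ns(CACHE_HIT_NS, miss_rate, RAM_PENALTY_NS)
    print(f"miss rate {miss_rate:.0%}: about {average:.1f} ns per access")
```

With these assumed numbers, cutting the miss rate from 10% to 2% drops the average access from roughly 11 ns to roughly 3 ns, which is why a bigger or smarter cache can matter as much as extra cores.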

Check out this comparison table:

| Device Type | Typical Core Count | Processing Unit Type | Common Use |
|---|---|---|---|
| Entry Laptop | 2-4 (CPU) | CPU | Everyday tasks, light browsing |
| Gaming Desktop | 6-16 (CPU) + thousands (GPU) | CPU + GPU | High-performance gaming, 3D work |
| Smartphone | 6-8 (CPU) | SoC (CPU + GPU + more) | Apps, multitasking, gaming |
| High-End Server | 32-128 (CPU) | Multi-CPU | Data centers, AI, big data |

When evaluating a product, don’t get distracted by the number of cores alone. Ask what type of processing unit hosts them, which jobs it’s designed to handle, and how these cores team up. That’s the real secret to unlocking speed and power without wasting money.

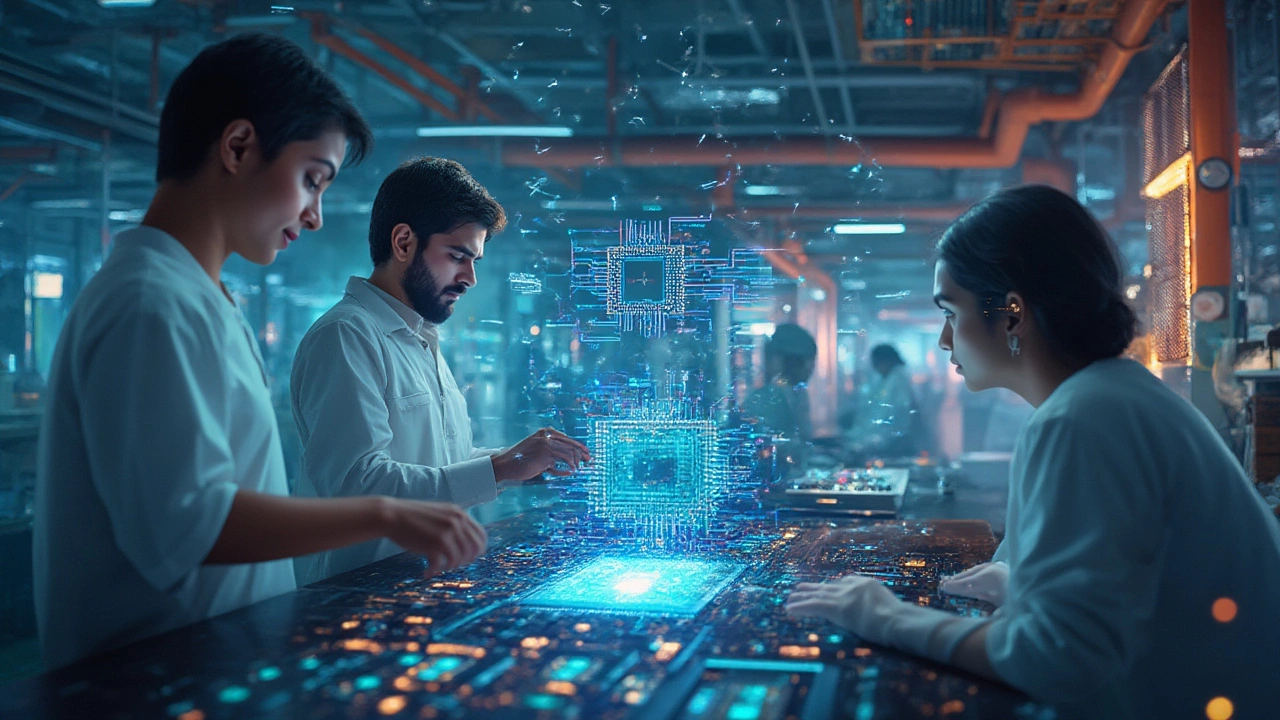

Why the Distinction Matters in Manufacturing

It’s not just phone or laptop buyers who should care about this stuff. In electronics manufacturing, knowing the difference between a core and a processing unit can make or break a product’s success. If an engineer confuses the two, they might slap in a basic multi-core CPU, thinking it can replace a specialized industrial control unit—and watch their assembly line grind to a halt. Or, a designer could over-engineer a product, wasting precious silicon and spiking costs for no performance gain. The stakes get high fast.

This trickles down all the way to the start of the manufacturing process. Let’s say you’re designing an automated quality scanner for a food processing factory. Do you really need an 8-core Intel CPU? Or would a focused ARM processor (with just 2-4 cores and a low-power GPU) be faster, cheaper, and more reliable? Choosing the right processing unit, with the proper number of right-sized cores, often means winning the contract versus fighting endless field failures.

One standout example comes from the automotive industry. Modern electric vehicles (EVs) are loaded with dozens of embedded systems—some built for power management, others for advanced driving aids. Industry researchers from MIT recently observed, "A single processing unit failure in EV control circuits can render safety features inoperative, regardless of the core count present."

"A car’s computing performance depends less on having the most cores, and more on finding the right balance: the right processing units dedicated to the proper job, on a robust network inside the vehicle." (MIT Computer Science & Artificial Intelligence Lab, 2024)

Manufacturers also care about heat and form factor. Jamming a high-core-count CPU into a tiny, unventilated drone invites overheating and, in the worst case, a fire. Instead, they lean on processing units with just enough small, efficient cores to keep up, but not so beefy that things melt mid-flight. Even in pharmaceutical machinery, using NPUs and FPGAs (field-programmable gate arrays), each suited to a specific processing role, changes how quickly and safely new drugs roll out.

And when it comes time to scale up assembly lines, say for a new smartphone launch, it’s only possible because engineers know how to balance computing needs. They choose chips with the best arrangement of cores and processing units—never just “the most on paper.” If you’re in the business of making electronics, knowing these fine lines is how you compete without waste or disaster.

Tips for Choosing the Right Technology and Understanding Specs

Alright, you’ve made it this far. So how do you use this knowledge in the real world—shopping for gear or choosing hardware for your next big project?

Start by decoding marketing hype. If a laptop shouts "10-core!" but hides that it’s a low-wattage mobile CPU, don’t expect workstation-grade power. Sometimes a 4-core, high-frequency chip with a beefy cache outperforms a 10-core low-power chip in real creative work, like editing 4K footage. Check the processing unit’s family and generation too: on lightly threaded work, one core of a modern architecture can outpace several cores of a chip that’s many years old.
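Here is a rough model of why the core count on the box can mislead. It scores a chip as per-core speed (clock times a relative work-per-cycle factor) multiplied by the usual parallel-speedup bound for the workload. Both chips, their clock speeds, and the `work_per_cycle` baseline are hypothetical, and the model ignores cache, memory bandwidth, and thermal throttling, so read it as a sketch rather than a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    name: str
    cores: int
    clock_ghz: float
    work_per_cycle: float = 1.0  # relative per-core efficiency; 1.0 is an arbitrary baseline

    def relative_throughput(self, parallel_fraction: float) -> float:
        """Per-core speed scaled by the parallel speedup the workload allows."""
        if not 0.0 <= parallel_fraction <= 1.0:
            raise ValueError("parallel_fraction must be between 0 and 1")
        per_core_speed = self.clock_ghz * self.work_per_cycle
        speedup = 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / self.cores)
        return per_core_speed * speedup


# Two made-up chips: fewer fast cores versus more slow cores.
fast_quad = Chip("hypothetical 4-core", cores=4, clock_ghz=5.0)
slow_deca = Chip("hypothetical 10-core", cores=10, clock_ghz=2.5)

for fraction in (0.60, 0.99):
    for chip in (fast_quad, slow_deca):
        score = chip.relative_throughput(fraction)
        print(f"{fraction:.0%} parallel, {chip.name}: {score:.1f}")
```

Under these assumptions the 4-core chip wins comfortably when only 60% of the work can be spread out, and the 10-core chip pulls ahead only when nearly everything runs in parallel. Which case applies depends on the software you actually use.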

Then, hunt down what tasks you need to run. If it’s mostly browsing, streaming, and emailing, even today’s basic CPUs have more than enough cores. For gaming or creative pro work, the combination of powerful CPU cores *and* a modern GPU (each as their own processing unit) is a must. It’s not about the biggest numbers, but about matching the workload to what each processing unit is built for.

- For office PCs: Aim for recent mid-range CPUs with 4-6 cores. More is overkill unless you know you’ll be doing heavy multitasking.

- For creative pros and gamers: Invest in the latest CPU and GPU pairing, making sure the core counts match your workflow. Certain programs and games scale well with more cores, others don’t.

- For manufacturing devices: Don’t blindly copy-paste the desktop formula. Custom hardware with specific processing units and fewer, but more efficient, cores can save heat, cash, and headaches.

- For DIY projects or startups: Experiment with low-cost, well-documented boards (like Raspberry Pi or Arduino). These have simple processors and clear specs, which makes them perfect for learning and prototyping.

Finally, remember that all the magic happens in how the cores and processing units complement each other. The next time you pick up a new device, you’ll know the specs aren’t just technobabble—they’re the toolkit that shapes speed, battery life, cost, and your sanity. And if you build or buy electronics for work, it means dodging costly mistakes and making smart picks, backed by how things actually work inside the silicon.